How to Run Advantage+ & Performance Max Without Losing Control

For Marketers Tired of Watching “Smart” Campaigns Do Dumb Things

In yesterday’s free article, we made one thing clear:

Automation doesn’t create volatility.

It accelerates whatever system you built.

This paid piece assumes you accept that premise — and answers the next, more useful question:

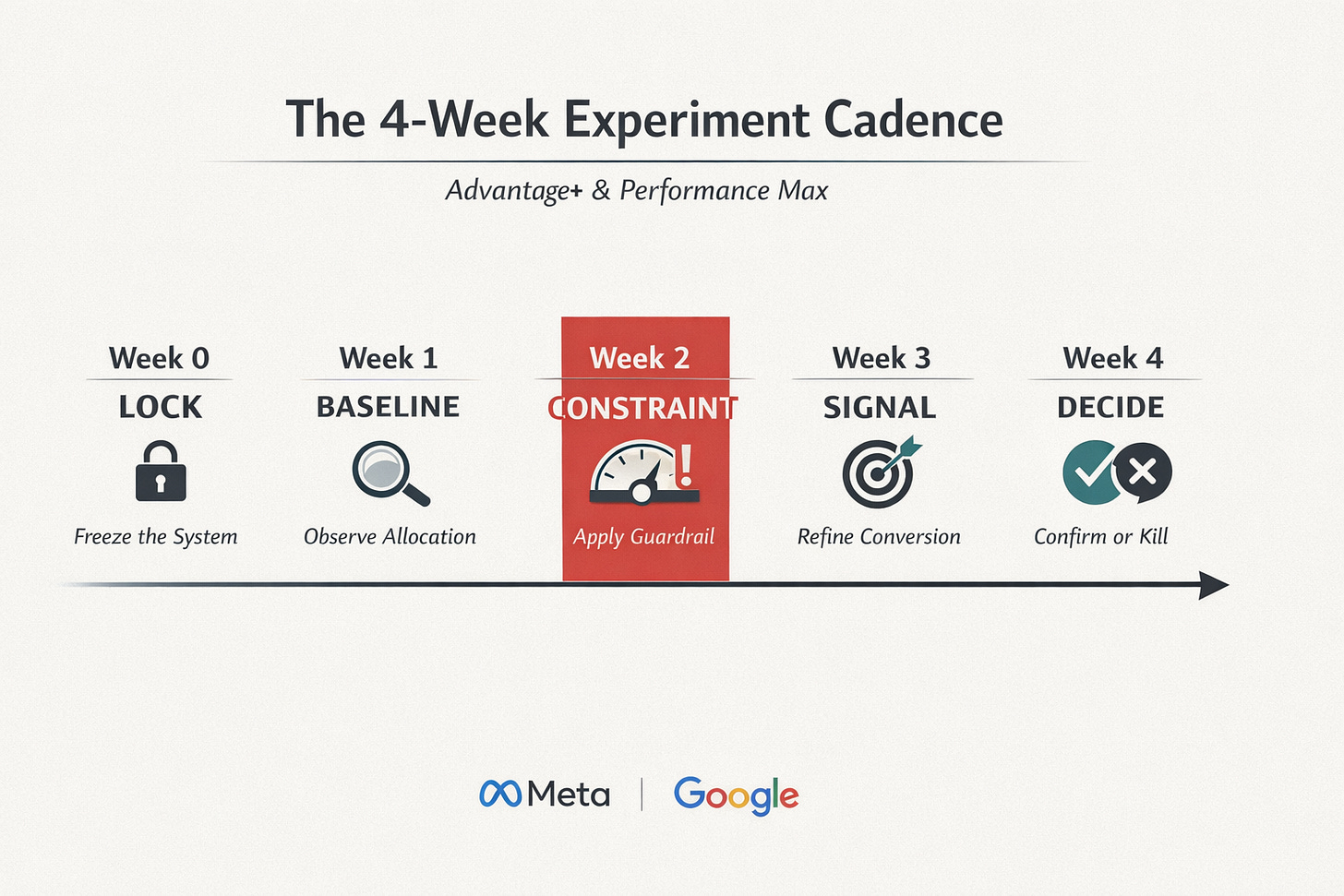

How do you design experiments that work with Advantage+ and Performance Max instead of being punished by them?

This is not about “testing more”.

It’s about testing differently — in a way automated systems can actually learn from.

Why Most Tests Fail on Advantage+ & PMax

Let’s start with the uncomfortable truth.

Most “experiments” on automated platforms fail because they break the conditions needed to attribute a result to a single change.

Common failure patterns:

Multiple variables change at once

Budgets fluctuate mid-test

Audiences overlap invisibly

Optimisation goals drift during learning

Results are read too early

Automation doesn’t break here.

Causality does.
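One way to protect causality is to audit the change log before reading any result. The sketch below is a minimal illustration in Python, not a platform feature: the `Change` record, the field names, and the 14-day `min_days` default are all assumptions made for this example. It flags four of the five patterns above; audience overlap needs platform-side data and is left out.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Change:
    """One edit made to a campaign (hypothetical change-log entry)."""
    day: date
    field: str  # e.g. "creative", "budget", "audience", "optimisation_goal"


def audit_test(changes, start, read_on, planned_variable, min_days=14):
    """Return a list of rule violations for one test window.

    min_days is an assumed placeholder, not a documented platform threshold.
    """
    problems = []

    # Every field that was edited between test start and the read date.
    touched = {c.field for c in changes if start <= c.day <= read_on}

    # Budget and optimisation goal should stay frozen unless they are the test.
    for frozen in ("budget", "optimisation_goal"):
        if frozen in touched and frozen != planned_variable:
            problems.append(f"{frozen} changed mid-test")

    # Anything else that moved besides the planned variable.
    others = sorted(touched - {planned_variable, "budget", "optimisation_goal"})
    if others:
        problems.append("extra variables changed: " + ", ".join(others))

    # Results read before the planned minimum duration.
    elapsed = (read_on - start).days
    if elapsed < min_days:
        problems.append(f"read after {elapsed} days; planned minimum is {min_days}")

    return problems


# Hypothetical log: a creative test where someone also touched the budget.
log = [Change(date(2024, 3, 4), "creative"), Change(date(2024, 3, 6), "budget")]
for problem in audit_test(log, date(2024, 3, 1), date(2024, 3, 8), "creative"):
    print(problem)
```

An empty list means the test window is clean enough to read. Anything else is a reason to restart the test, not to interpret it.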

Advantage+ and PMax are not just campaign types.

They are allocation engines.

If you don’t constrain what they’re allowed to learn, they learn the wrong thing very efficiently.

The Core Reframe: You Don’t Test Ads — You Test Allocation Logic

Manual platforms let you test inputs.

On automated platforms, you test where marginal dollars go.

That means your experiment unit changes.

You are no longer testing:

“Creative A vs B”

“Audience X vs Y”

You are testing:

Which signals deserve more budget

Which constraints protect quality while scaling

This is why traditional A/B logic breaks — and why volatility spikes when teams “just test”.
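A small worked example shows why the unit changes. The figures are hypothetical and `marginal_return` is an illustrative helper, not a platform metric. It compares the average return at a higher budget with the return on only the extra spend, which is the number an allocation test is really about.

```python
def marginal_return(spend_low, revenue_low, spend_high, revenue_high):
    """Revenue earned per extra unit of spend between two budget levels."""
    extra_spend = spend_high - spend_low
    if extra_spend <= 0:
        raise ValueError("spend_high must exceed spend_low")
    return (revenue_high - revenue_low) / extra_spend


# Hypothetical figures for illustration only.
spend_low, revenue_low = 1000, 4000
spend_high, revenue_high = 1500, 5200

average = revenue_high / spend_high
marginal = marginal_return(spend_low, revenue_low, spend_high, revenue_high)

print(f"average return at the higher budget: {average:.2f}")
print(f"marginal return on the extra spend:  {marginal:.2f}")
```

With these made-up numbers the blended return still looks healthy, while the extra 500 earns noticeably less per unit than the first 1000 did. A creative-vs-creative comparison would not surface that gap.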